Cloud computing has promised a revolution in scalability, reliability, and performance. With just a few clicks, developers can deploy applications that supposedly “auto-scale” to meet demand, giving end users a seamless experience no matter how large the traffic spike. But what happens when those promises fall short and users are met with frustrating 503 Service Unavailable errors instead? That’s exactly what happened when one development team placed too much trust in their cloud provider’s auto-scaling: they were blindsided by unexpected bottlenecks, overly strict health checks, and lagging response times.

TL;DR

Auto-scaling is not a silver bullet. A development team relying on a cloud host’s auto-scaling started seeing 503 errors during peak load. The root cause wasn’t insufficient resources but the load balancer’s health checks, which rejected new instances before they could finish starting up. The fix involved relaxing the health check rules, delaying readiness until initialization was complete, speeding up instance boot, and accepting the gap between how cloud scaling works in theory and how it behaves in practice.

The Illusion of Infinite Scale

It started out well: a horizontally scalable app hosted on a popular cloud platform. The auto-scaling groups (ASGs) were set up with generous thresholds, and the documentation seemed clear—new instances would spin up automatically whenever CPU usage or request volume exceeded predefined values.
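To make the trigger concrete, here is a minimal Python sketch of a threshold-based scale-out rule. The policy fields (`cpu_threshold`, `requests_per_instance`, `max_instances`) and the helper `desired_capacity` are hypothetical illustrations, not any provider’s API; real auto-scaling groups evaluate alarms over time windows and apply cooldowns that this sketch omits.

```python
from dataclasses import dataclass


@dataclass
class ScalingPolicy:
    # Hypothetical thresholds, chosen only for illustration.
    cpu_threshold: float = 70.0        # average CPU % that triggers scale-out
    requests_per_instance: int = 1000  # target request rate each instance should carry
    max_instances: int = 20            # hard ceiling on group size


def desired_capacity(policy: ScalingPolicy, current: int, avg_cpu: float, request_rate: int) -> int:
    """Return the instance count a simple, scale-out-only threshold rule would request."""
    # Instances needed so each carries at most its target request rate (ceiling division).
    by_requests = -(-request_rate // policy.requests_per_instance)
    desired = max(current, by_requests)
    # If CPU is still above the threshold, ask for at least one more instance.
    if avg_cpu > policy.cpu_threshold:
        desired = max(desired, current + 1)
    # Never exceed the configured maximum.
    return min(desired, policy.max_instances)


policy = ScalingPolicy()
print(desired_capacity(policy, current=4, avg_cpu=85.0, request_rate=2500))  # 5
print(desired_capacity(policy, current=4, avg_cpu=50.0, request_rate=6200))  # 7
```

A rule like this only decides how many instances to launch. Whether those instances actually serve traffic is a separate question.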

During a period of moderate traffic growth, the setup worked as advertised: new virtual machines (VMs) spun up quickly, and users had a smooth experience. Then came the product launch. Traffic surged well beyond its usual patterns and peaked unexpectedly. At first the team was confident, since dashboards showed new instances spawning as expected. Yet users began reporting errors.

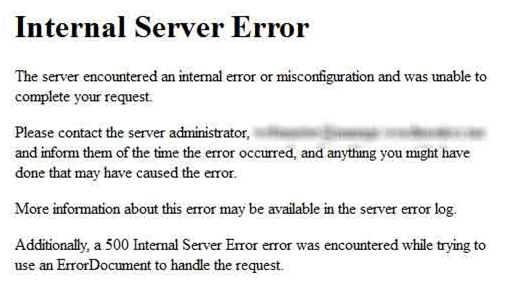

Specifically, the site returned HTTP 503 errors—the dreaded sign that a server is temporarily unable to handle the request. That’s when panic set in.

Scaling Happened, But Availability Failed

The logs told a strange story. The ASG was indeed spinning up new instances in response to demand spikes, yet traffic was still bottlenecked, and a major culprit turned out to be the health checks configured on the load balancer.

Cloud load balancers use health checks to determine whether an instance is fit to serve traffic. If a new instance fails these checks—even temporarily—it remains in an “unhealthy” state and is blocked from receiving traffic. In this incident, newly spawned instances were failing health checks for a crucial 60–90 seconds after booting because:

- The application needed time to initialize and load dependencies.

- Containerized services that depended on one another were not yet ready.

- The health check was overly strict, and its timeout expired too quickly.

The result? Instances were created but not put into rotation fast enough. Worse, because too few servers were healthy, traffic piled onto the existing ones, overloading them and driving even more 503 responses.
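A back-of-the-envelope model shows why launched-but-unhealthy instances do not help. The Python sketch below assumes a hypothetical fixed per-instance capacity and treats every request above the healthy pool’s total capacity as rejected; real overloaded servers usually degrade more gradually (and sometimes worse), so the numbers are illustrative only.

```python
def rejected_fraction(request_rate: float, per_instance_capacity: float, healthy_instances: int) -> float:
    """Fraction of requests exceeding the healthy pool's capacity (simplified model)."""
    # Only instances that pass health checks receive traffic, so only they add capacity.
    capacity = per_instance_capacity * healthy_instances
    if request_rate <= capacity:
        return 0.0
    return (request_rate - capacity) / request_rate


# Hypothetical launch spike: 10 instances running, 500 req/s each, 4,000 req/s arriving.
print(rejected_fraction(4000, 500, healthy_instances=4))   # 0.5
print(rejected_fraction(4000, 500, healthy_instances=10))  # 0.0
```

With all ten instances in rotation, the spike fits comfortably; with only four passing health checks, half the traffic has nowhere to go.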

Understanding the Load Balancer’s Rules

The health check logic was unforgiving: it required the `/status` endpoint to return 200 OK within three seconds.

That might seem reasonable in most cases. But during a traffic surge, when a freshly booted server is still initializing its application code, warming up caches, or working through a slow cold start, even three seconds can feel like a sprint. A few mistimed retries, and the load balancer decides that the instance is “unhealthy.”
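The rule is easy to reproduce locally. In the Python sketch below, which uses only the standard library, `probe` counts a check as passed only if `/status` answers 200 OK within the timeout, and a throwaway local server stands in for an instance that is still initializing. The function names and the shortened delays (fractions of a second instead of real boot times) are assumptions for the demo; it does not model any specific cloud provider’s health checker.

```python
import http.client
import http.server
import threading
import time
import urllib.request

# Connect directly, as a load balancer would, ignoring any proxy environment variables.
_DIRECT = urllib.request.build_opener(urllib.request.ProxyHandler({}))


def probe(url: str, timeout_s: float) -> bool:
    """Pass only if the endpoint returns 200 OK within timeout_s seconds."""
    try:
        with _DIRECT.open(url, timeout=timeout_s) as resp:
            return resp.status == 200
    except (OSError, http.client.HTTPException):
        # Timeouts, refused connections, and error statuses (urllib's HTTPError is an OSError) all fail.
        return False


class StatusHandler(http.server.BaseHTTPRequestHandler):
    startup_delay_s = 0.0  # simulated time the app needs before it can answer

    def do_GET(self):
        time.sleep(self.startup_delay_s)
        try:
            self.send_response(200 if self.path == "/status" else 404)
            self.send_header("Content-Length", "0")
            self.end_headers()
        except (BrokenPipeError, ConnectionResetError):
            pass  # the prober already gave up and closed the connection

    def log_message(self, *args):
        pass  # silence per-request logging for the demo


def start_fake_instance(startup_delay_s: float):
    """Start a local stand-in for a new instance; returns (server, status URL)."""
    handler = type("DelayedHandler", (StatusHandler,), {"startup_delay_s": startup_delay_s})
    server = http.server.ThreadingHTTPServer(("127.0.0.1", 0), handler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    host, port = server.server_address[:2]
    return server, f"http://{host}:{port}/status"


warm, warm_url = start_fake_instance(startup_delay_s=0.0)
cold, cold_url = start_fake_instance(startup_delay_s=0.5)
print(probe(warm_url, timeout_s=3.0))  # True
print(probe(cold_url, timeout_s=0.2))  # False: the answer arrives after the timeout
for server in (warm, cold):
    server.shutdown()
    server.server_close()
```

Shrinking the timeout below the simulated initialization delay flips the result from pass to fail, which mirrors what the three-second rule did to freshly booted instances.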

The postmortem revealed several misconfigurations:

- Health check endpoint queried before services were fully initialized.

- Health check timeout set too low.

- Retry settings too tight to absorb the initial boot latency.

As a result, every time the cloud provider’s autoscaler scaled up, it technically added capacity but failed to put that capacity to use.
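How timeout and retry settings interact is easiest to see by replaying a sequence of probe results. In the Python sketch below, `evaluate_target` is a hypothetical, simplified model of a load balancer’s decision: a new instance enters service after `healthy_threshold` consecutive passes and is marked unhealthy after `unhealthy_threshold` consecutive failures. Only the timeouts (the original 3 seconds and the later 10) come from this incident; the thresholds and response times are assumed values, and the model stops at the first decision even though real load balancers keep probing unhealthy instances.

```python
from typing import Optional, Sequence


def evaluate_target(
    response_times: Sequence[Optional[float]],
    timeout_s: float,
    healthy_threshold: int,
    unhealthy_threshold: int,
) -> str:
    """Replay probe results for one new instance and return its first decided state.

    Each entry is the seconds a probe took to get 200 OK, or None if it got no
    200 at all (connection refused, error status). Simplified, hypothetical model.
    """
    passes = failures = 0
    for rt in response_times:
        if rt is not None and rt <= timeout_s:
            passes, failures = passes + 1, 0
            if passes >= healthy_threshold:
                return "in_service"
        else:
            passes, failures = 0, failures + 1
            if failures >= unhealthy_threshold:
                return "unhealthy"
    return "pending"  # not enough consecutive results to decide yet


# Hypothetical warm-up: port not bound yet, then slow answers while caches load, then fast.
warmup = [None, 5.0, 4.5, 4.0, 0.3, 0.2]

print(evaluate_target(warmup, timeout_s=3.0, healthy_threshold=2, unhealthy_threshold=2))   # unhealthy
print(evaluate_target(warmup, timeout_s=10.0, healthy_threshold=2, unhealthy_threshold=5))  # in_service
```

For this particular warm-up, either change alone would help: a longer timeout accepts the slow early answers, and a higher unhealthy threshold gives the instance time to reach its fast phase.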

The Fix: Retraining the Load Balancer

The team’s fix began not with adjusting the auto-scaling policies, but with reconfiguring the load balancer’s expectations.

They made the following changes:

- Extended health check timeout: From 3 seconds to 10 seconds, allowing more time for new instances to respond after starting up.

- Increased unhealthy threshold: An instance could now fail several consecutive checks before being marked unhealthy.

- Readiness gating in startup scripts: The health check port was bound only after all services were ready.

- Warmed-up launch templates: Bootstrapping was optimized using pre-warmed VM images, drastically reducing cold-boot latency.

Once these changes were implemented, subsequent scaling events went much more smoothly. Instances passed health checks more reliably and were added to the load balancer faster, effectively absorbing unexpected traffic spikes.
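The readiness-gating change can be sketched in Python as follows. `wait_until_ready` polls a set of dependency checks, and the health check port is bound only once all of them pass, so the load balancer never reaches an instance that is still initializing. The dependency names, port, deadline, and both helpers are hypothetical; the team’s actual startup scripts are not described here.

```python
import http.server
import time
from typing import Callable, Dict


def wait_until_ready(checks: Dict[str, Callable[[], bool]], deadline_s: float, poll_s: float = 0.5) -> bool:
    """Poll each dependency check until all pass; give up after deadline_s seconds."""
    start = time.monotonic()
    pending = dict(checks)
    while True:
        # Drop every dependency that reports ready on this pass.
        for name, check in list(pending.items()):
            if check():
                del pending[name]
        if not pending:
            return True
        if time.monotonic() - start >= deadline_s:
            return False
        time.sleep(poll_s)


class StatusHandler(http.server.BaseHTTPRequestHandler):
    def do_GET(self):
        # The server is created only after startup finishes, so 200 here means "ready".
        self.send_response(200 if self.path == "/status" else 404)
        self.send_header("Content-Length", "0")
        self.end_headers()


def gated_startup(
    checks: Dict[str, Callable[[], bool]],
    port: int = 8080,
    deadline_s: float = 120.0,
    poll_s: float = 0.5,
) -> http.server.ThreadingHTTPServer:
    """Bind the health check port only after every dependency check passes."""
    if not wait_until_ready(checks, deadline_s, poll_s):
        # Never bind the port, so the load balancer never probes a half-started instance.
        raise RuntimeError("dependencies not ready before deadline")
    # Binding happens here; the caller then runs server.serve_forever().
    return http.server.ThreadingHTTPServer(("0.0.0.0", port), StatusHandler)


# Demo with a hypothetical cache that reports ready on its third poll.
polls = {"cache": 0}


def cache_ready() -> bool:
    polls["cache"] += 1
    return polls["cache"] >= 3


server = gated_startup({"database": lambda: True, "cache": cache_ready}, port=0, deadline_s=5.0, poll_s=0.01)
print(polls["cache"], server.server_address[1] > 0)  # 3 True
server.server_close()
```

In a real startup script the final step would be `server.serve_forever()`; the demo only confirms that the port is bound after the third poll and then closes it.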

Lessons Learned from the Scaling Illusion

This incident serves as a hard lesson in real-world cloud behavior. The team learned that while auto-scaling is a powerful feature, it’s only as effective as the operational setup around it—particularly how new resources are validated and brought into traffic rotation.

Auto-scaling is only as good as your slowest service dependency and most fragile health check. Moreover, the abstraction layers of managed services can be a double-edged sword. They offer convenience, but can mask important behaviors that require tuning to align with real-world use cases.

Best Practices Going Forward

To prevent similar issues, the team documented new internal guidelines:

- Implement proactive instance warming using launch templates.

- Always test health check endpoints under slow boot conditions.

- Use canary deployments to verify new server functionality before exposing it to users.

- Use instance lifecycle hooks to control exactly when an instance enters the load balancer pool.

- Ensure observability with enhanced logging, APM, and metrics dashboards during scale-up periods.

Conclusion

Auto-scaling remains one of the most compelling features of cloud infrastructure. But it’s not a magic button. Its effectiveness depends on how quickly your services can warm up and how tolerant your load balancer configuration is. Misalignment between the two can turn auto-scaling from a hero into a hidden villain. Thankfully, with the right adjustments, teams can once again harness its power, this time with both eyes open.

FAQ

- What causes 503 errors during auto-scaling?

- 503s typically occur when servers cannot handle incoming requests. During auto-scaling, this can happen if new instances are spun up but fail health checks and aren’t added to the load balancer fast enough.

- How do load balancer health checks impact auto-scaling?

- Health checks decide whether an instance is ready to serve traffic. If new instances fail these checks due to slow boot times or strict response rules, they won’t absorb traffic, leading to overloaded existing servers.

- What are good practices for configuring health checks?

- Use a realistic timeout, allow multiple retries before marking an instance unhealthy, ensure your health check endpoint is resilient, and delay marking the port as ready until after full initialization.

- Can pre-warmed instances help reduce 503 errors?

- Yes, pre-warming—or using previously warmed-up images—can reduce boot times significantly, lowering the chances that new instances fail health checks during a sudden scaling event.

- Is auto-scaling always reliable?

- Auto-scaling offers dynamic responsiveness, but its success depends on how resilient and fast your application initializes. Poor configurations or delayed startup processes can undermine its strengths.